A common requirement in APEX development is an editable report (tabular form) in which individual column values may be changed. This works very well in 90% of cases. In some cases, however, the standard mechanism is unfortunately not the 100% solution. If you use a particularly large number of LOV columns in your editable report, this can lead to performance problems. This blog post takes a closer look at exactly that problem.

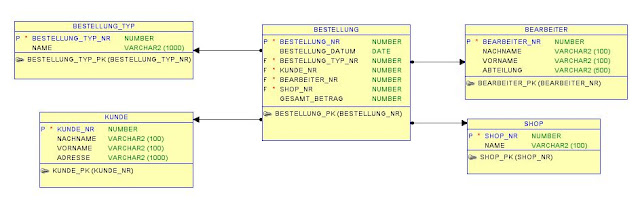

Our example uses an order table in which the column GESAMT_BETRAG (total amount) may be edited after the fact. :)

For simplicity, the data model consists of only 4 master data tables and one order table.

(To reproduce the long load times yourself, you will probably need a few more LOV columns in your tabular form.)

![Data model]()

The standard way to build a tabular form in APEX is as follows.

1. Create a tabular form based on the table BESTELLUNGEN

(Create Region > Create Form > Create Tabular Form)

```sql
SELECT BESTELLUNG_NR, BESTELLUNG_DATUM, BESTELLUNG_TYP_NR,
       KUNDE_NR, BEARBEITER_NR, SHOP_NR, GESAMT_BETRAG
FROM BESTELLUNGEN
```

2. Instead of displaying a bunch of FK IDs, an LOV is attached to each FK column

(Column Attributes > Display As: "LOV Type")

Display As: Display as Text (based on LOV, does not save state)

This is repeated for all other FK columns.

Example: BEARBEITER_NR

```sql
SELECT nachname || ', ' || vorname AS d,
       bearbeiter_nr AS r
FROM bearbeiter
```
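Following the same pattern, the LOVs for the remaining FK columns might look like the sketch below. The table and column names are taken from the view definition used later in this post; the display formats are assumptions. Each query is entered separately as the LOV of its own column.

```sql
-- LOV for KUNDE_NR: display "Nachname, Vorname", return KUNDE_NR
SELECT nachname || ', ' || vorname AS d,
       kunde_nr AS r
FROM kunde

-- LOV for SHOP_NR: display the shop name, return SHOP_NR
SELECT name AS d,
       shop_nr AS r
FROM shop

-- LOV for BESTELLUNG_TYP_NR: display the order type name, return BESTELLUNG_TYP_NR
SELECT name AS d,
       bestellung_typ_nr AS r
FROM bestellung_typ
```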

If you now run the report and it displays around 200 records, noticeably longer wait times can easily occur. The reason? Each LOV is executed once per record. This is easy to see in debug mode.
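To put this into numbers: our example has four FK columns, each with its own LOV. Assuming exactly one LOV execution per record and LOV column, a report of around 200 records results in roughly this many LOV lookups per page view:

$$
n_{\text{LOV}} = n_{\text{rows}} \cdot n_{\text{LOV columns}} = 200 \cdot 4 = 800
$$

The view-based approach resolves all display values in a single join query instead.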

The alternative is to use a view and configure it to be updatable.

In our example, only the total amount should remain editable after the fact.

```sql
-- View DDL
CREATE OR REPLACE VIEW VIEW_BESTELLUNGEN AS
SELECT B.ROWID AS ROW_ID, B.BESTELLUNG_NR, B.BESTELLUNG_DATUM,
       BT.NAME AS BESTELLUNG_TYP, B.BESTELLUNG_TYP_NR,
       K.NACHNAME || ', ' || K.VORNAME AS KUNDE, B.KUNDE_NR,
       BA.NACHNAME || ', ' || BA.VORNAME AS BEARBEITER, B.BEARBEITER_NR,
       S.NAME AS SHOP, B.SHOP_NR,
       B.GESAMT_BETRAG
FROM BESTELLUNGEN B, BESTELLUNG_TYP BT, KUNDE K, BEARBEITER BA, SHOP S
WHERE B.SHOP_NR = S.SHOP_NR
  AND B.BEARBEITER_NR = BA.BEARBEITER_NR
  AND B.KUNDE_NR = K.KUNDE_NR
  AND B.BESTELLUNG_TYP_NR = BT.BESTELLUNG_TYP_NR;
```
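Before writing a trigger, it can be worth checking which columns of the view Oracle itself would update directly. A minimal sketch using the data dictionary view USER_UPDATABLE_COLUMNS, assuming the view is named VIEW_BESTELLUNGEN as in the tabular form query. The result depends on your primary and unique key constraints, so no specific output is shown here.

```sql
-- List each view column and whether Oracle considers it directly updatable
SELECT column_name, updatable
FROM   user_updatable_columns
WHERE  table_name = 'VIEW_BESTELLUNGEN'
ORDER  BY column_name;
```

Columns built from expressions, such as KUNDE or BEARBEITER, are never directly updatable.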

```sql
-- New tabular form query
SELECT BESTELLUNG_NR, BESTELLUNG_DATUM, BESTELLUNG_TYP, KUNDE,
       BEARBEITER, SHOP, GESAMT_BETRAG
FROM VIEW_BESTELLUNGEN
```

For the view to know where changes should be saved, an INSTEAD OF trigger must be defined:

```sql
CREATE OR REPLACE TRIGGER VIEW_BESTELLUNGEN_IOU
  INSTEAD OF UPDATE
  ON VIEW_BESTELLUNGEN
  REFERENCING NEW AS new OLD AS old
  FOR EACH ROW
BEGIN
  -- Write only GESAMT_BETRAG back to the base table.
  -- The view has no ID column; rows are matched via BESTELLUNG_NR
  -- (assumed to be the primary key of BESTELLUNGEN).
  UPDATE BESTELLUNGEN
     SET GESAMT_BETRAG = :new.gesamt_betrag
   WHERE BESTELLUNG_NR = :old.bestellung_nr;
EXCEPTION WHEN OTHERS THEN
  -- Please, do some error handling and allow me
  -- to skip this part for this time...
  RAISE;
END VIEW_BESTELLUNGEN_IOU;
/
```

Info: If we wanted to make one of the LOV columns editable, a defined LOV would be the better solution.
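To test the trigger outside of APEX, you can update the view directly, for example in SQL*Plus or SQL Developer. A sketch, assuming an order with BESTELLUNG_NR = 1 exists and the trigger matches rows via BESTELLUNG_NR; the order number and the amount are hypothetical values.

```sql
-- Update the total of one order through the view;
-- the INSTEAD OF trigger redirects this to BESTELLUNGEN.
UPDATE VIEW_BESTELLUNGEN
SET    GESAMT_BETRAG = 99.90
WHERE  BESTELLUNG_NR = 1;

-- Check that the base table received the new value
SELECT BESTELLUNG_NR, GESAMT_BETRAG
FROM   BESTELLUNGEN
WHERE  BESTELLUNG_NR = 1;

-- Undo the test change
ROLLBACK;
```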